%load_ext autoreload

%autoreload 2

import numpy as np

import pandas as pd

import seaborn as sns

from matplotlib import pyplot as plt

import sklearnThe autoreload extension is already loaded. To reload it, use:

%reload_ext autoreloadSally Liu

April 14, 2023

https://github.com/Sallyliubj/Sallyliubj.github.io/blob/main/posts/Unsupervised%20Learning%20with%20Linear%20Algebra/source.py

I completed Part 1 of the blog post: Image Compression with the Singular Value Decomposition

This blog post consists of 4 parts:

Access an RGB image from the internet by its URL, download it, and convert it to greyscale using the workflow shown above.

Write a function called svd_reconstruct that reconstructs an image from its singular value decomposition.

Perform an experiment in which I reconstruct my image with several different values of k.

(Optional)Implement and demonstrate two additional functionalities - compression factor and epsilon threshold - in my svd_reconstruct function.

Implement my SinguarValueDecomposition class:

Here is the look of the original image as well as its grayscale:

Here, it can be seen that, when k = 30, the compressed image is not very clear, but is distinguishable.

The different values of k may affect how clear the reconstructed image will be.

To see how the values of k will affect the clearity of the image, I perform an experiment in which I reconstruct my image with several different values of k:

The choice of k goes up until I can’t distinguish the reconstructed image from the original by eye

I also determine the amount of storage needed for my reconstruction as a fraction of the amount of storage needed for the original image

When k goes up to 100-150, the reconstructed image cannot be distinguishable from the original from eye.

Amount of storage:

The image of m x n size uses k singular values, then we need to store (m x k + n x k + k) numbers.

So the fraction of storage for my reconstruction would be (m x k + n x k + k) / (m x n).

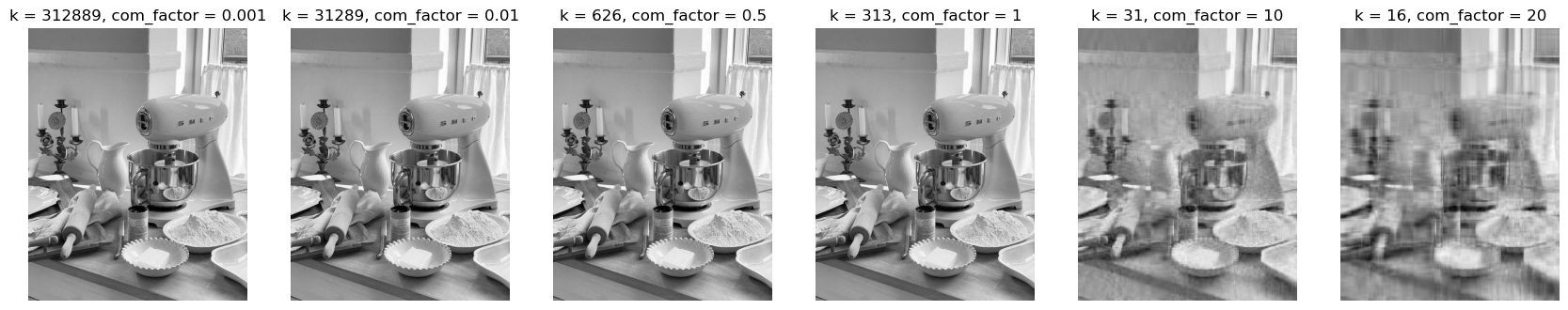

I allow the user to specify a desired compression factor and select the number of components k to use based on this selection.

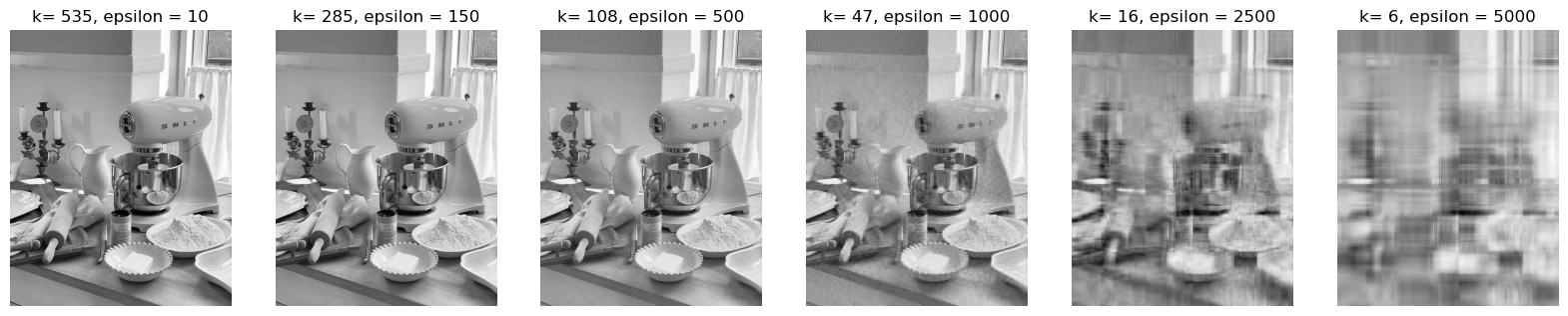

I allow the user to specify a desired threshold epsilon for the singular values. Then, only components for which the corresponding singular value is larger than epsilon are used.

fig, ax = plt.subplots(1,6, figsize=(21, 8))

curr_fig = 0

gray_img = svd.to_greyscale(img)

m,n = gray_img.shape

for c in [0.001,0.01,0.5,1,10,20]:

img1 = svd.svd_reconstruct(img, com_factor = c)

ax[curr_fig].imshow(img1, cmap = "Greys")

k = (m*n)/(c*(m+n+1))

k = round(k)

ax[curr_fig].set_title("k = "+ str(k)+", com_factor = "+ str(c))

ax[curr_fig].axis('off')

curr_fig += 1

plt.show()

Findings:

The smaller the compression factor is, the larger the value of k will be.

The image will have relatively high clearity when compression factor is less than 1.

fig, ax = plt.subplots(1,6, figsize=(20, 8))

curr_fig = 0

A = svd.to_greyscale(img)

m,n = A.shape

for e in [10,150,500,1000,2500,5000]:

img2 = svd.svd_reconstruct(img, epsilon = e)

ax[curr_fig].imshow(img2, cmap = "Greys")

U, sigma, V = np.linalg.svd(A)

D = np.zeros_like(A,dtype=float)

D[:min(A.shape),:min(A.shape)] = np.diag(sigma)

k = svd.threshold(e,D)

ax[curr_fig].set_title("k= "+ str(k)+", epsilon = "+ str(e))

ax[curr_fig].axis('off')

curr_fig += 1

plt.show()

Findings:

The larger the epsilon threshold is, the less k components will be chosen, so the image will have less clearity.

When the epsilon threshold is below 500-1000, the image will have relatively high clearity.